Hardware Video Transcoding on the Raspberry PI 4 and AMD Graphics

Whenever a project starts with the thought “How hard can it be?”, it usually turns out to be much more extensive than initially estimated. I started by investigating how to build a Plex alternative optimized for the Raspberry PI 4 and other energy-efficient x86_64 platforms. The reason behind this is to support hardware that Plex does not: Plex does not support hardware transcoding on the Raspberry PI 4 or on AMD graphics hardware running Linux. That is also where I started off, investigating how to make use of hardware video transcoding on these platforms.

Licenses, Patents and APIs

To get the most annoying aspects of this topic out of the way, let’s start with them right away. When working with audio and video, one also has to consider the legal aspects. Many codecs are patented and require license fees to be paid. The available software and APIs are licensed under various open source licenses, which could affect one’s own project depending on its licensing model.

Let’s start with the audio side of things. Many codecs can be transcoded today without paying any licensing fees. The patents protecting MP3 and AC3 expired some time ago, and more modern open codecs like Opus (libopus) are royalty-free and provide even better compression. This means encoding audio shouldn’t be a problem. Also, most platforms are usually fast enough to transcode audio in software. The only challenge might be figuring out whether one is allowed to decode newer high-resolution formats like Dolby TrueHD and DTS-HD. There are decoders available in ffmpeg, but I couldn’t find any information on the patent situation of those audio codecs. In the worst case it would be necessary to simply copy the audio stream.

Now to the actual subject of this blog post: video codecs. By far the most common video codecs are MPEG-2, h.264 and hevc (h.265). The patents for MPEG-2 have all expired, meaning DVD rips can be freely transcoded. The other two are still covered by valid patents, so if one did video transcoding in software, one would have to pay the licensing fees. Using libx264 as a fallback might therefore sound like a good idea, but really isn’t. Fortunately for us, when utilizing hardware codecs the vendor pays the license fees and we can freely make use of them. A good explanation of the situation, summed up by Jina Liu, can be found here. It basically tells us that AMD, Intel and the Raspberry PI Foundation pay the license fees for their products.

So by cleverly choosing the underlying technology, we can avoid paying patent fees! Now we just have to figure out which APIs to use.

- vaapi is an open source (MIT License) API originally developed by Intel. Both AMD and Intel support this API very well. Depending on the hardware, it can decode and encode h.264, vp9 and h.265 content in SDR and HDR.

- vdpau was the API favoured by Nvidia, although it does not seem to be well supported these days and only covers decoding, not encoding. Nvidia seems to focus on its proprietary APIs nvenc and nvdec instead.

- nvenc/nvdec are proprietary implementations and part of the official Nvidia binary driver. Besides offering far more features than the older vdpau, this API has the advantage of also being available on Windows.

- Quick Sync is the name of the encoder and decoder blocks built into Intel CPUs with integrated graphics. It is available through the Intel Media SDK and can also be used via vaapi.

- v4l2_m2m: This API is favoured by many embedded ARM boards. The implementation for the Raspberry PI 4 is still in development, but piece by piece the functionality is being merged into the upstream projects (kernel, ffmpeg,…). On the Raspberry PI 4, this API is used to access the h.264 encoder and decoder.

- v4l2 request api: The newer stateless sibling of v4l2_m2m. It is required to access the h.265 decoder on the Raspberry PI 4. [Update on 28.10.2020: added note about v4l2 request api]

- omx/mmal: For the sake of completeness: These are the old APIs supported on the different Raspberry PI versions. OMX is short for OpenMAX which is a deprecated standard for embedded systems. MMAL is a library on top of this standard, developed by Broadcom. Using these libraries might be necessary if one works with older Raspberry PIs. I recommend focusing on the recent versions and APIs instead, even if they are not quite ready yet.

Of course, using one of these APIs directly only makes sense in special situations where the source material does not vary (e.g. security applications, surveillance hardware,…). In a case like Plex, one would likely prefer ffmpeg or gstreamer: not only do they support a much wider range of video codecs and abstract the different APIs, they also handle parsing all the container formats (mkv, mp4,…). The only disadvantage is that both are licensed under the LGPL (ffmpeg becomes GPL-licensed when built with --enable-gpl), which might be an issue for some proprietary use cases.

FFmpeg

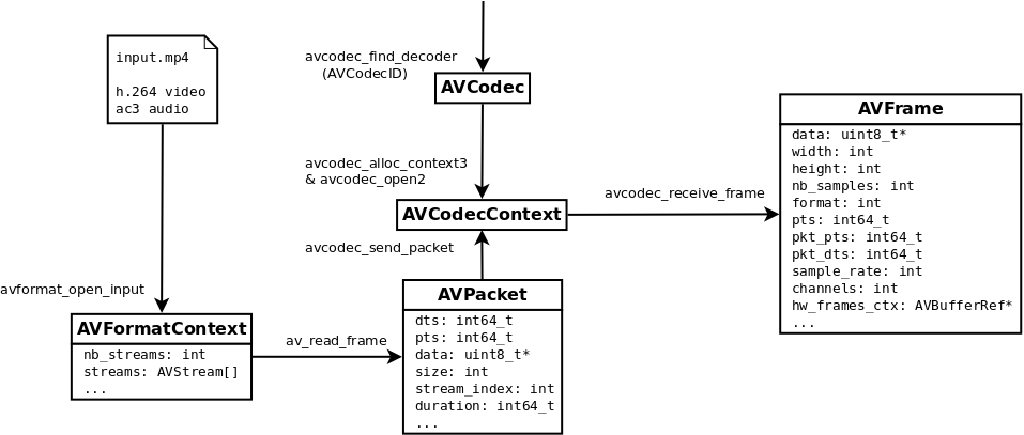

The ffmpeg command-line tool is well known for video and audio transcoding. In fact, it is just a wrapper around the functionality of its framework components. Those components are the libraries we have to work with: libavformat, libavcodec, libavutil and libswscale, amongst others. The resources I used were mostly the API documentation and the official examples. What makes them a bit harder to follow, in my opinion, is that they are written in C and are not as nicely structured as one might be used to from object-oriented programming languages. The diagram below should give a simple overview of the decoding process and show which components one has to work with.

Reading a video file (demuxing) is handled by libavformat, while the actual decoding is done by libavcodec. Calling avformat_open_input opens the container and returns an AVFormatContext instance describing its streams (avformat_find_stream_info fills in further details where the container headers are incomplete). Following this, the packets stored in the container can be read from the file by calling av_read_frame. The decoder is set up by looking up a codec (AVCodec) and initializing an AVCodecContext instance with the stream’s codec parameters. Packets can then be sent to the decoder by calling avcodec_send_packet. It is important to create a decoder for every stream one wants to decode and to pass each packet to the correct decoder, as identified by the packet’s stream_index. By calling avcodec_receive_frame on the context, one can retrieve the decoded video frames or audio samples.

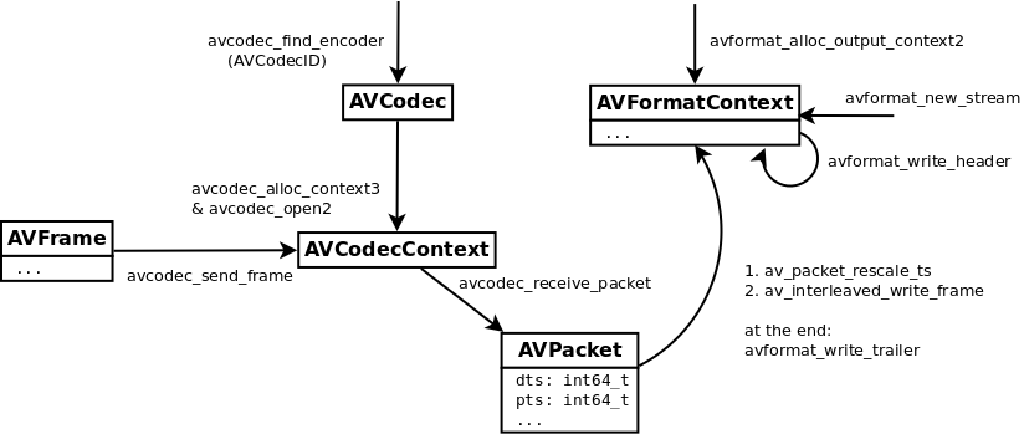

The encoding process involves the same components again, just with different functions: frames are sent to the encoder with avcodec_send_frame, and the resulting packets are retrieved with avcodec_receive_packet and written to the output container with av_interleaved_write_frame. The slightly complex part, which I left out of the diagrams, is the correct initialization, i.e. setting up the codec and stream parameters. This information can be taken from the examples for demuxing, transcoding and muxing. As you will see, many parameters can be taken directly from the input stream or codec contexts and don’t have to be specified manually.

The Raspberry PI 4: v4l2_m2m

In order to utilize this API, the Raspberry PI 4 needs to run a recent version of the Linux 5.4 kernel series maintained by the Raspberry PI Foundation. Fortunately, Ubuntu 20.04 arm64 already ships a reasonably up-to-date version of this kernel. Details can be found in the ubuntu-raspi-focal repository on kernel.ubuntu.com. If you follow its logs, you will see that the bcm2835-codec and rpivid kernel modules are enabled. The former implements a wrapper for the v4l2_m2m api on top of Broadcom’s mmal and offers h.264 encoding and decoding for video streams of up to 1080p. The latter is the new driver offering h.265 decoding through the v4l2 request api at resolutions of up to 2160p. H.265 encoding is not supported by the SoC.

In addition, some fixes and changes for ffmpeg have not been fully upstreamed yet, so one has to compile a custom version of ffmpeg. I tried the official upstream repository (commit #ea8f8d2) as well as the development branch recommended in the Raspberry PI forum (commit #0e26fd5), without success. The official repository produced some green frames and the development branch simply segfaulted. The only version I used successfully was the older ffmpeg version shipped with the Raspbian distribution. It is available on GitHub. Just remember to apply the fixes in the debian/patches directory:

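```shell
# Build dependencies of the distribution's ffmpeg package (needs deb-src entries enabled)
sudo apt build-dep ffmpeg

git clone https://github.com/RPi-Distro/ffmpeg.git
cd ffmpeg

# Apply the fixes shipped in debian/patches
patch -i ./debian/patches/0001-avcodec-omx-Fix-handling-of-fragmented-buffers.patch -p 1
patch -i ./debian/patches/ffmpeg-4.1.4-mmal_6.patch -p 1
patch -i ./debian/patches/fix_flags.diff -p 1

# Install into /opt/ffmpeg-rpi so the system ffmpeg stays untouched
./configure --prefix=/opt/ffmpeg-rpi --enable-shared --enable-gpl --enable-libx264 --enable-nonfree --disable-cuvid
make -j4
sudo make install
```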

After installing a working version of ffmpeg I adapted my test application. Fortunately the v4l2_m2m support in ffmpeg is quite easy to utilise. There is no need to enumerate devices and copy frames between device and host. It is enough to call avcodec_find_decoder_by_name and avcodec_find_encoder_by_name with “h264_v4l2m2m” as an argument.

This worked fine in all my tests as long as the encoder frame size did not reach 1080p. In those cases, ffmpeg tried to allocate a buffer of 1088 lines for some reason and failed to initialize the encoder with the error message “VIDIOC_STREAMOFF failed on output context”. I can only assume that this hits the limits of the encoder hardware. I would guess that the encoder logic has some requirements for the frame height, for example being a multiple of 16 (or 32…) due to its block-based algorithms (DCT, motion estimation,…). This would match how h.264 works: pictures are coded in 16x16 macroblocks, so a 1080-line frame is padded to 68 macroblock rows, i.e. 1088 lines. Most likely, cutting off some lines with libswscale would solve this issue.
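The rounding behind the 1088 lines, as a small C sketch in the spirit of libavutil's FFALIGN macro. The helper names are hypothetical, and whether the Raspberry PI encoder needs a multiple of 16 or 32 is an assumption; rounding down gives the largest height below the source height that satisfies such a constraint.

```c
/* Round value up / down to a multiple of align (align must be a power of two). */
static inline int align_up(int value, int align)
{
    return (value + align - 1) & ~(align - 1);
}

static inline int align_down(int value, int align)
{
    return value & ~(align - 1);
}
```

For a 1080-line source, `align_up(1080, 16)` yields the 1088 lines seen in the failing allocation, while `align_down(1080, 16)` yields 1072, a candidate output height for the line-cutting workaround described above.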

With h.265/hevc I had less luck. I was able to get the kernel devices to show up on the filesystem by adding “dtoverlay=rpivid-v4l2” to /boot/firmware/usercfg.txt, but ffmpeg still complained about not finding a compatible device, even after making sure that the v4l2 request api was used instead of v4l2_m2m. I will definitely have to give this another try later on.

Update 31.12.2020: I did some more tests today with the v4l2 request API and managed to get the hevc decoder up and running. You can read more about it in a newer blog post.

AMD Graphics: vaapi

In contrast to v4l2_m2m and its Raspberry PI 4 driver implementation, vaapi (libva) has been around for a lot longer, so the drivers and the support in ffmpeg have had time to mature quite a bit. Recent Ubuntu and Fedora releases should therefore provide a working out-of-the-box experience. On Fedora, only the RPM Fusion repositories have to be enabled first in order to install ffmpeg.

Since vaapi also supports dedicated graphics cards with their own memory, a few additional steps are required. First, one needs to create a hardware device context, which is shown in this example, and pass it to the decoder. When working with frames, one also needs to copy the data back and forth between host and device memory. This is possible by combining av_hwframe_get_buffer and av_hwframe_transfer_data. Additionally, one needs to create a hardware frames context for the encoder, which is also shown in the example above. There is a shortcut for this if the only goal is to re-encode the video stream with a different bitrate: in the transcode example, the hardware frames context of the decoder is reused. While it takes a bit more effort initially, this API works quite well.

On my AMD systems, I was offered encode and decode functionality for h.264 and h.265. I did these tests with a dedicated graphics card (Radeon RX 570) as well as an integrated GPU (Ryzen 3 2200G). The h.265 encoder positively surprised me: re-encoding the open movie Sintel (~15 min) from h.265 to h.265 took about 3:50 min on the 2200G and about 2:55 min on the RX 570, i.e. roughly four and five times faster than real time. Considering how demanding h.265 encoding is in software, these results are quite satisfying.

Verdict

While I had hoped for better results on the Raspberry PI 4, overall I’m quite happy with the outcome. It shows that hardware transcoding is possible with an open source software stack. I will of course follow further developments in the Raspberry PI 4 community.

The well-implemented vaapi support on AMD hardware was a positive surprise. I might use these hardware codecs in the future, although I will likely not implement a Plex alternative just yet. As hinted at the beginning, it would take a bit more time than I have available at the moment. Who would have thought ;)?